Why CISOs need to understand the AI tech stack – Help Net Security

Published on: 2025-06-16

Intelligence Report: Why CISOs Need to Understand the AI Tech Stack – Help Net Security

1. BLUF (Bottom Line Up Front)

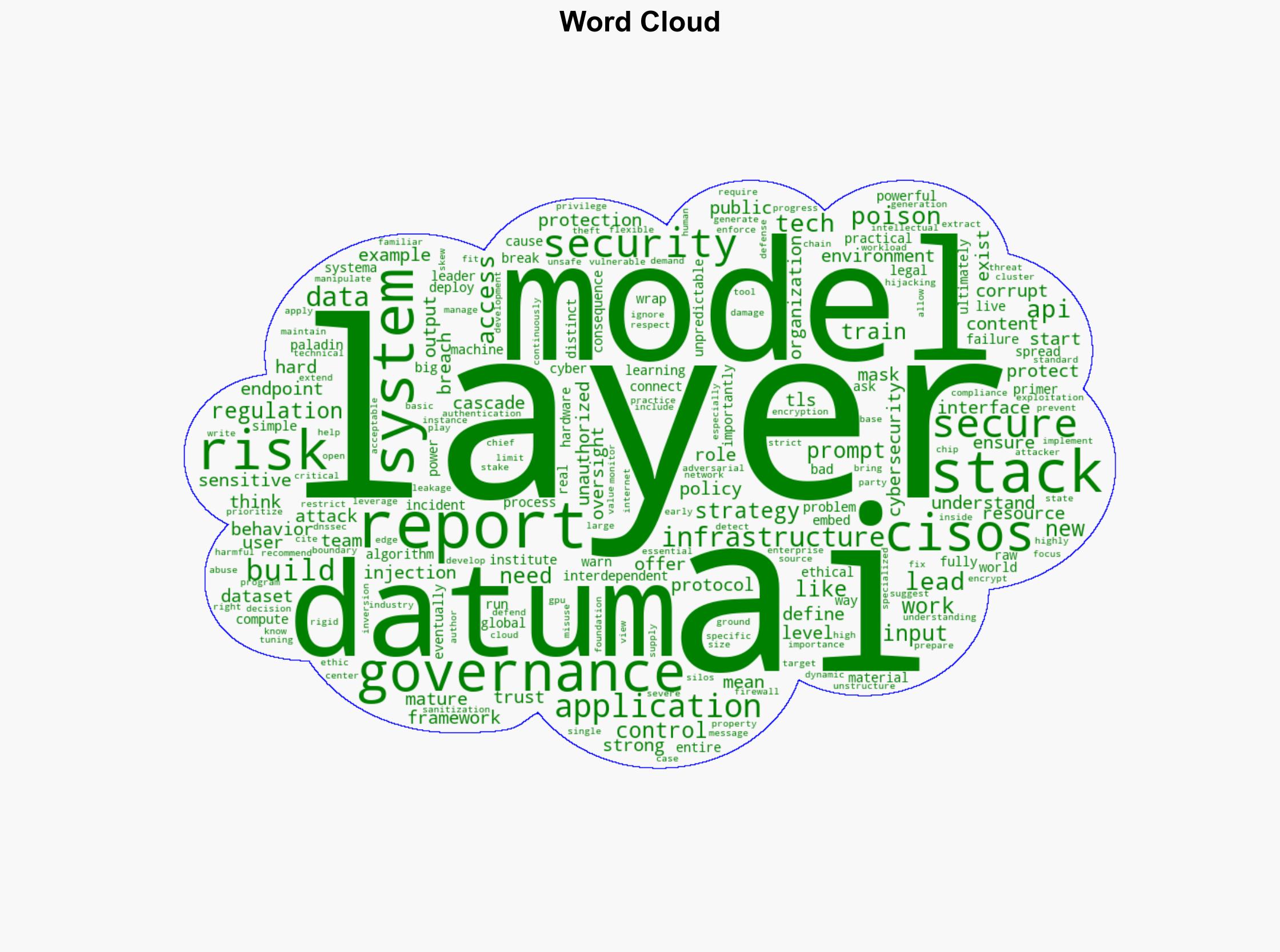

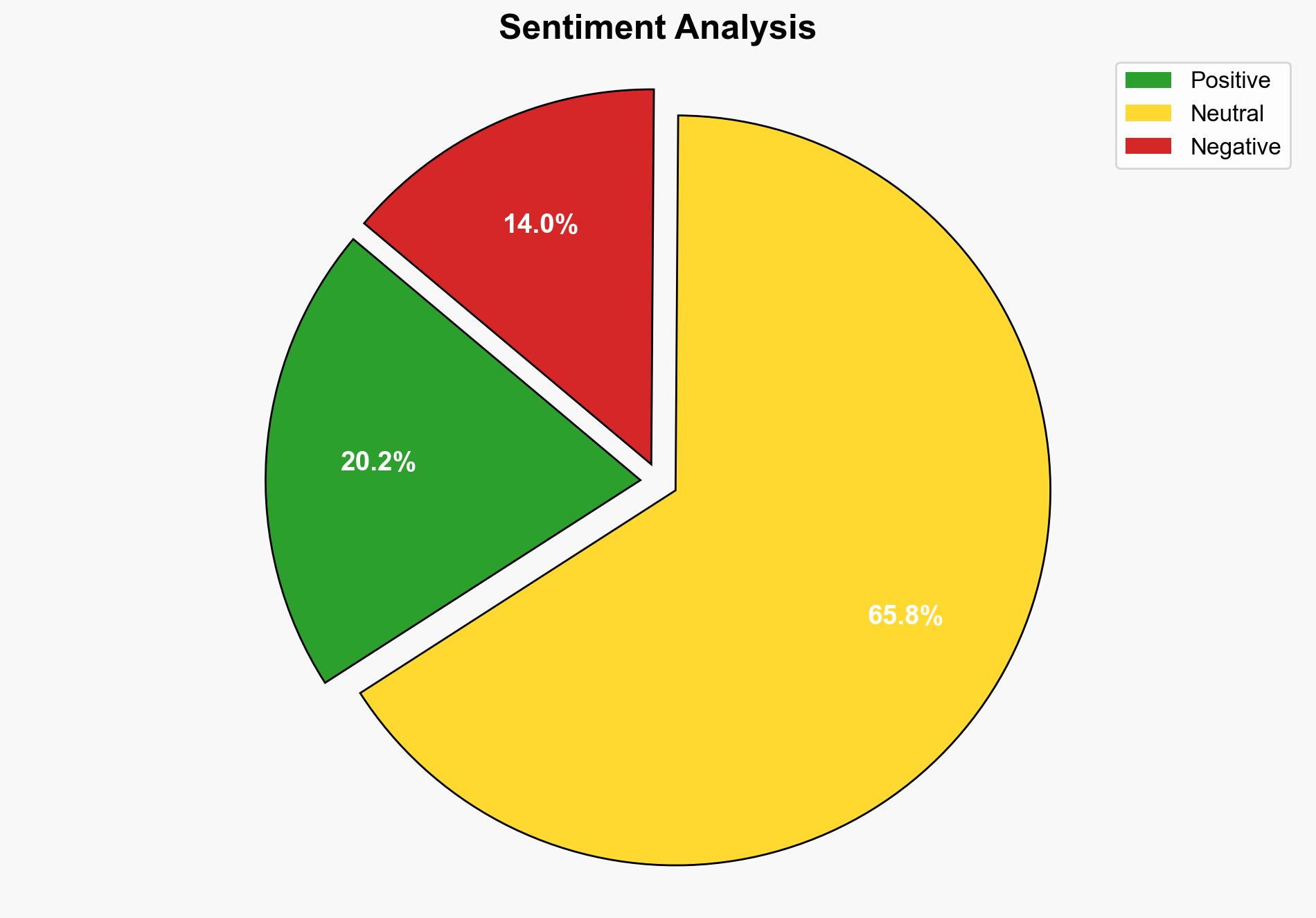

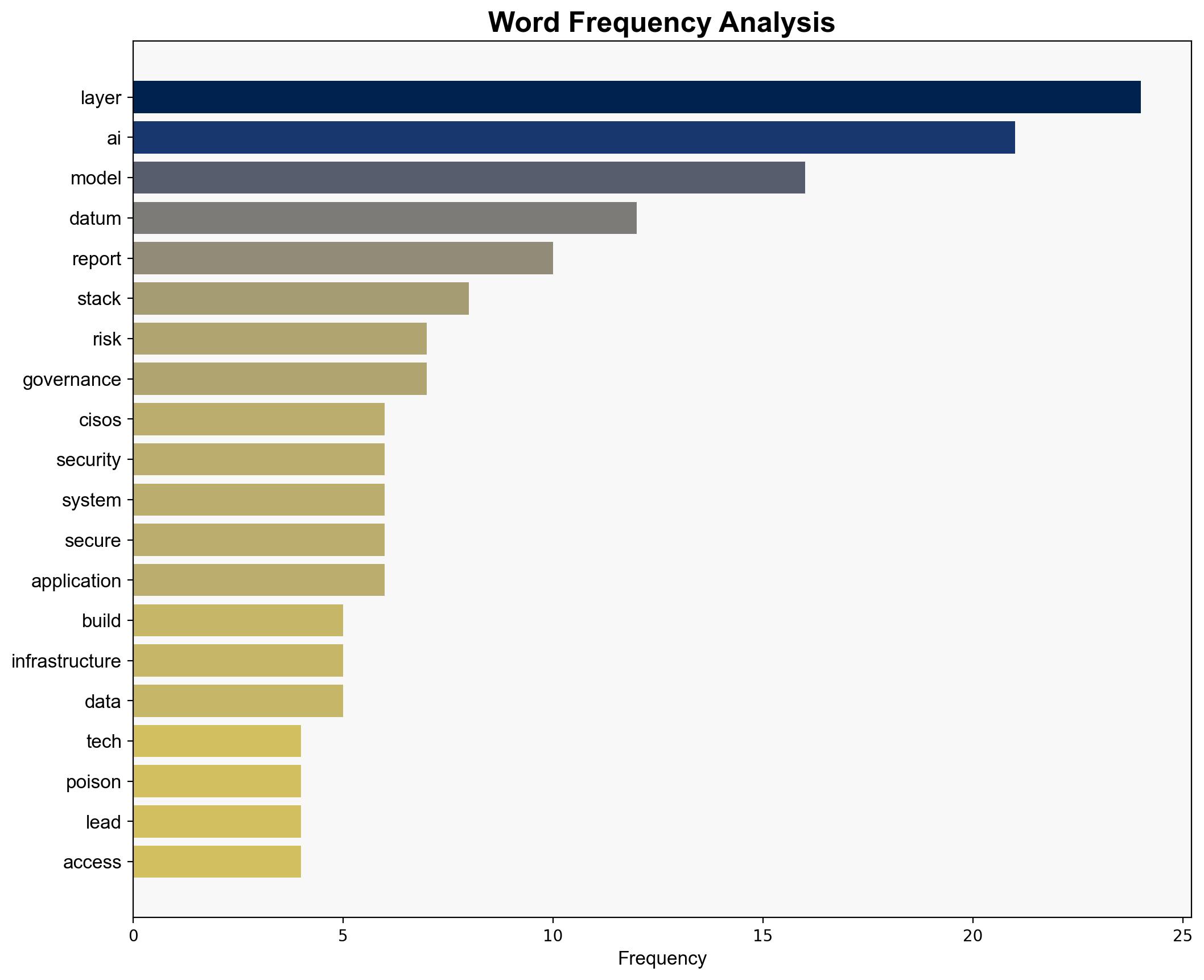

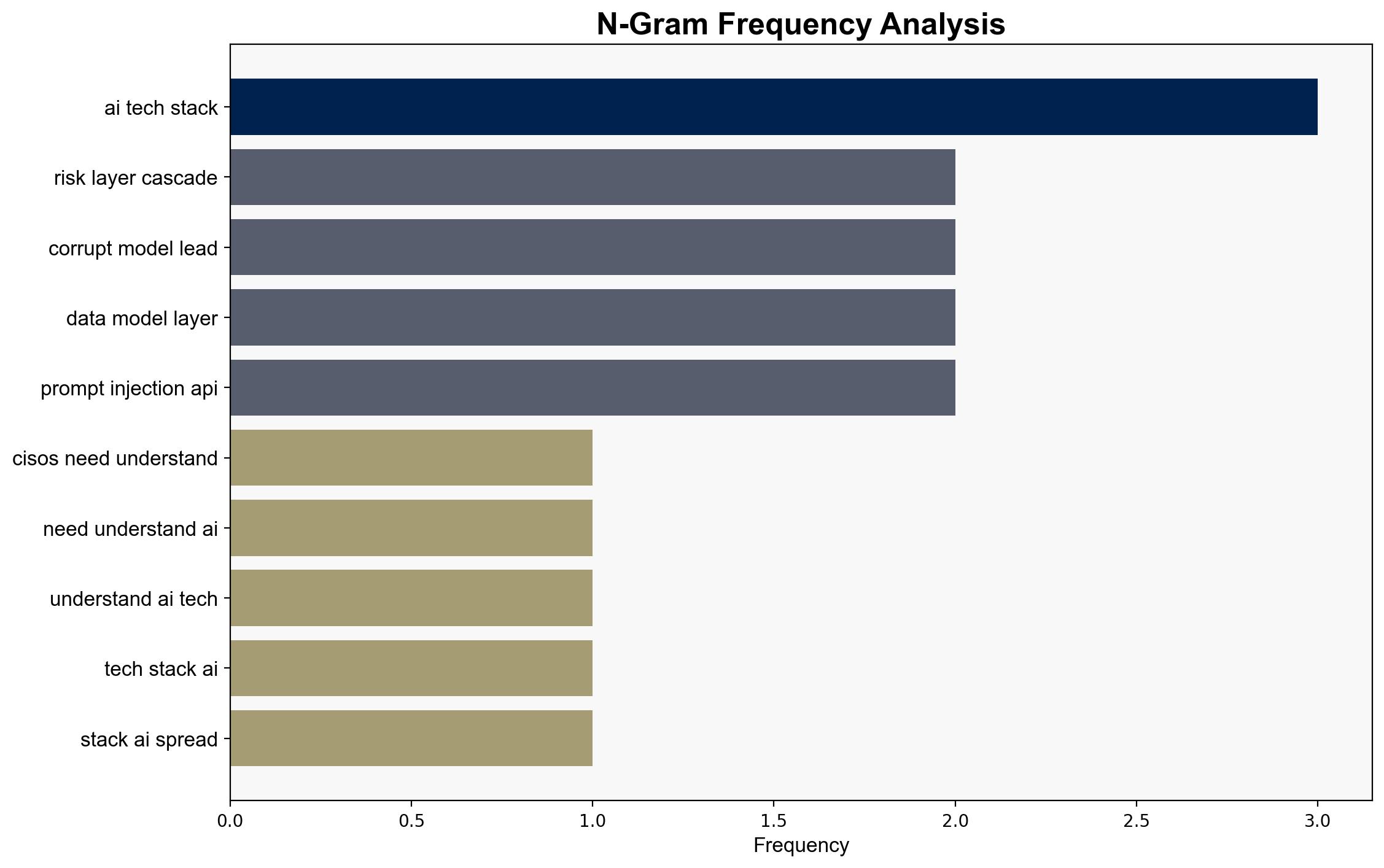

The report stresses that Chief Information Security Officers (CISOs) need to understand the AI technology stack in order to mitigate its security risks. It identifies five distinct layers (data, model, infrastructure, application, and governance) and argues that understanding each one is essential for building protection into the system and preserving its integrity. It recommends prioritizing the data and model layers, because the rest of the stack depends on them and because they are exposed to attacks such as data poisoning and model theft.

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

Adversarial Threat Simulation

Simulated hostile actions reveal vulnerabilities in the AI stack, particularly in data integrity and model security.
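As a toy illustration of one such hostile action (not the report's own simulation, whose method is not described), the sketch below mounts a targeted label-flipping data poisoning attack against a hypothetical nearest-centroid classifier trained on synthetic one-dimensional data. The dataset, the classifier, and the helper names (`make_data`, `train_centroids`, `poison`) are assumptions chosen for brevity.

```python
import random
from statistics import mean


def make_data(n, seed):
    """Hypothetical toy dataset: class 0 centred at 0.0, class 1 centred at 4.0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = 0.0 if label == 0 else 4.0
        data.append((rng.gauss(centre, 1.0), label))
    return data


def train_centroids(data):
    """Nearest-centroid 'model': the mean feature value of each class."""
    return {c: mean(x for x, y in data if y == c) for c in (0, 1)}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda c: abs(x - centroids[c]))


def accuracy(centroids, data):
    return sum(predict(centroids, x) == y for x, y in data) / len(data)


def poison(data, fraction, seed):
    """Targeted label flipping: relabel a fraction of class-1 points as class 0."""
    rng = random.Random(seed)
    return [(x, 0) if y == 1 and rng.random() < fraction else (x, y)
            for x, y in data]


train = make_data(2000, seed=1)
test = make_data(2000, seed=2)  # the test set itself is never tampered with
clean_acc = accuracy(train_centroids(train), test)
poisoned_acc = accuracy(train_centroids(poison(train, 0.5, seed=3)), test)
print(f"clean: {clean_acc:.3f}  poisoned: {poisoned_acc:.3f}")
```

Relabelling half of the class-1 training points drags the class-0 centroid toward class 1, so the decision boundary shifts and test accuracy drops even though the test data and the training features are untouched.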

Indicators Development

Indicators were developed to monitor anomalies in data inputs and model outputs, enabling early threat detection.
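The report does not say which indicators were developed. A minimal sketch of one common kind, assuming a numeric stream of model output scores (for example, per-request confidence values), flags any value that sits more than `threshold` standard deviations from the mean of the preceding `window` values. This rolling z-score rule is a swapped-in example technique, and `flag_anomalies`, `window`, and `threshold` are hypothetical names and defaults.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=20, threshold=3.0):
    """Return indices whose value lies more than `threshold` sample standard
    deviations from the mean of the preceding `window` values."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a deviation")
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is treated as anomalous.
            if values[i] != mu:
                flagged.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical confidence scores: steady around 0.51, then a sudden spike.
scores = [0.50, 0.52] * 15 + [0.95]
print(flag_anomalies(scores))
```

A fixed z-score threshold is easy to audit but assumes roughly stable behaviour within the window; drifting or seasonal workloads would need a baseline that adapts.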

Bayesian Scenario Modeling

Probabilistic models were used to estimate likely cyberattack pathways, focusing on the cascading effects of a compromised data layer.
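The report does not publish its model, but the cascading logic can be sketched as a simple chain of conditional probabilities running from the data layer to the model layer to the application layer. All probabilities below are hypothetical placeholders, not figures from the report. `cascade` propagates the marginal chance of compromise down the chain with the law of total probability, and `posterior_upstream` applies Bayes' rule to ask how likely the data layer is to be the root cause once a model-layer compromise is observed.

```python
def cascade(prior, links):
    """Marginal probability that each layer in a chain is compromised.

    prior -- P(first layer compromised)
    links -- per downstream layer, a pair
             (P(compromised | upstream compromised),
              P(compromised | upstream clean))
    """
    probs = [prior]
    for p_if_bad, p_if_clean in links:
        upstream = probs[-1]
        # Law of total probability over the upstream layer's state.
        probs.append(p_if_bad * upstream + p_if_clean * (1 - upstream))
    return probs


def posterior_upstream(prior, p_if_bad, p_if_clean):
    """Bayes' rule: P(upstream compromised | downstream compromised)."""
    evidence = p_if_bad * prior + p_if_clean * (1 - prior)
    return p_if_bad * prior / evidence


# Hypothetical inputs for a data -> model -> application chain.
P_DATA = 0.05
DATA_TO_MODEL = (0.60, 0.01)
MODEL_TO_APP = (0.70, 0.02)

probs = cascade(P_DATA, [DATA_TO_MODEL, MODEL_TO_APP])
print([round(p, 4) for p in probs])
print(round(posterior_upstream(P_DATA, *DATA_TO_MODEL), 3))
```

With these made-up inputs, a 5% chance of a data-layer compromise raises the model layer's compromise probability from a 1% baseline to about 4%, and observing a compromised model implies roughly a 76% chance that the data layer was the source. The chain assumes each layer depends only on the layer directly upstream; a real assessment would need a richer dependency graph.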

Network Influence Mapping

Influence relationships were mapped to assess how various actors affect AI system vulnerabilities and security policies.

3. Implications and Strategic Risks

The report identifies significant risks arising from AI system vulnerabilities, including public incidents caused by corrupted models. It highlights how a breach of the data layer can cascade into governance failures and an erosion of public trust. Because AI systems increasingly shape decision-making, these strategic risks extend into the economic and political spheres.

4. Recommendations and Outlook

- Implement encryption, access controls, and data masking to secure the data layer and protect intellectual property.

- Enhance model security through input sanitization and endpoint protection to prevent adversarial attacks.

- Adopt AI-specific cybersecurity frameworks, building on existing security practices and adapting them to AI environments.

- Scenario-based projections suggest that, in the best case, robust security measures will prevent major incidents, while the worst case involves significant breaches leading to public distrust and regulatory backlash.
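Two of the controls listed above can be illustrated in a few lines of Python. This is a minimal sketch under stated assumptions, not a complete defence: `mask_value` and `mask_record` hide all but the last few characters of fields a caller marks as sensitive (a simple form of data masking), and `sanitize_prompt` normalizes text bound for a model endpoint, strips control and invisible format characters, and enforces a length limit. The function names, the 2,000-character cap, and the choice of Unicode NFKC normalization are all assumptions.

```python
import unicodedata

MAX_PROMPT_CHARS = 2000  # hypothetical limit; tune per endpoint


def mask_value(text: str, keep: int = 4) -> str:
    """Replace all but the last `keep` characters with '*'."""
    if len(text) <= keep:
        return "*" * len(text)
    return "*" * (len(text) - keep) + text[-keep:]


def mask_record(record: dict, sensitive_fields: set, keep: int = 4) -> dict:
    """Return a copy of `record` with the named fields masked."""
    return {
        key: mask_value(str(value), keep) if key in sensitive_fields else value
        for key, value in record.items()
    }


def sanitize_prompt(text: str, max_chars: int = MAX_PROMPT_CHARS) -> str:
    """Normalize and clean text before it reaches a model endpoint."""
    # NFKC folds compatibility forms, e.g. fullwidth letters, to plain ones.
    text = unicodedata.normalize("NFKC", text)
    # Drop control (Cc), format (Cf, e.g. zero-width space) and other
    # category-C characters, keeping ordinary newlines and tabs.
    text = "".join(
        ch for ch in text
        if ch in "\n\t" or not unicodedata.category(ch).startswith("C")
    )
    if len(text) > max_chars:
        raise ValueError(f"input exceeds {max_chars} characters")
    return text.strip()


print(mask_record({"name": "Ada", "card": "4111111111111111"}, {"card"}))
print(sanitize_prompt("hello\x00 world\u200b"))
```

Sanitization of this kind removes malformed or hidden input but does not by itself stop adversarial inputs written in ordinary text, so it complements rather than replaces endpoint protection and output monitoring.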

5. Key Individuals and Entities

The report does not specify individuals by name but references the Paladin Global Institute as a key entity in AI tech stack analysis.

6. Thematic Tags

national security threats, cybersecurity, AI governance, data protection, risk management