Why DeepSeek is cheap at scale but expensive to run locally – Seangoedecke.com

Published on: 2025-06-01

Intelligence Report: Why DeepSeek is Cheap at Scale but Expensive to Run Locally – Seangoedecke.com

1. BLUF (Bottom Line Up Front)

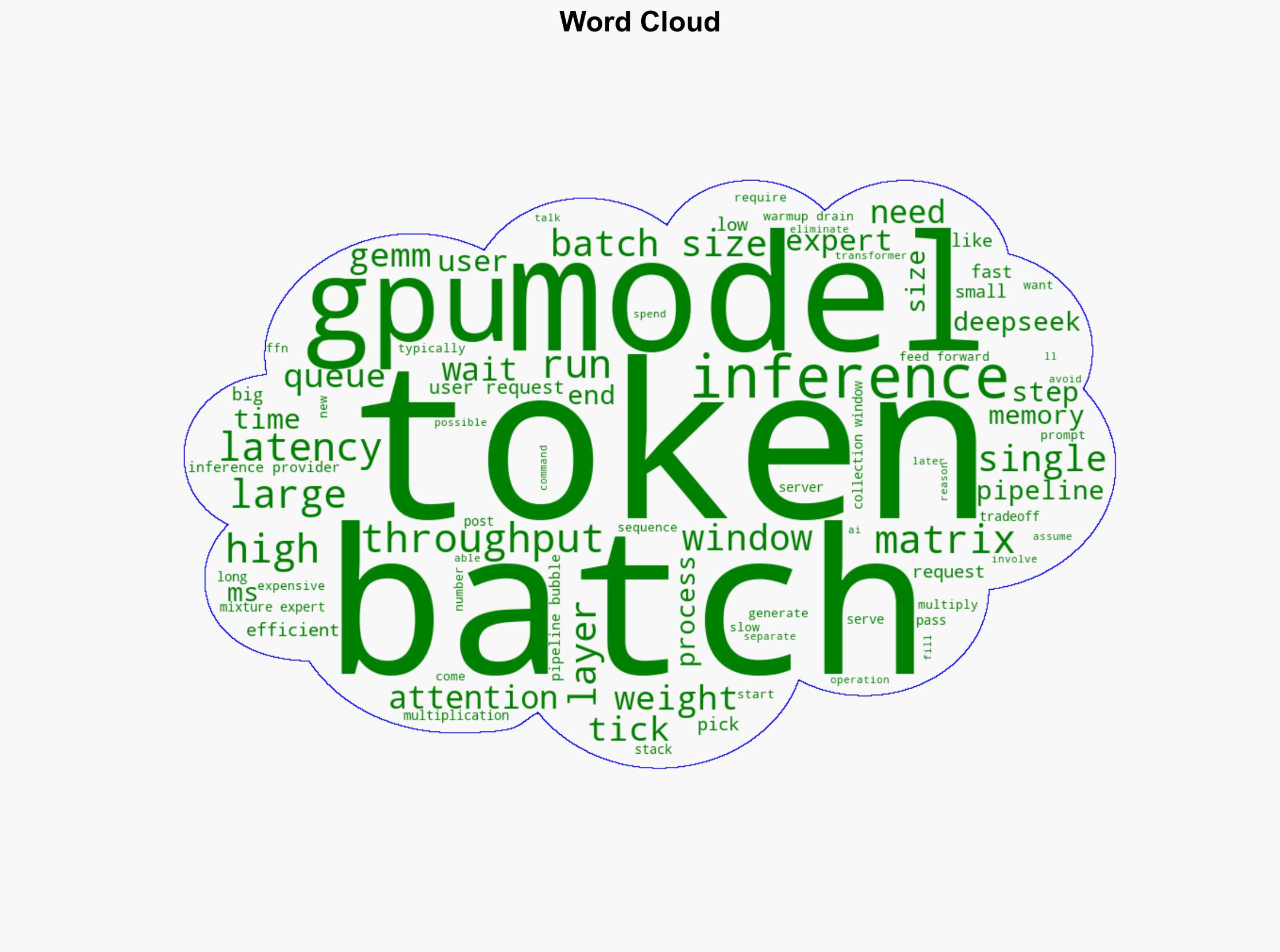

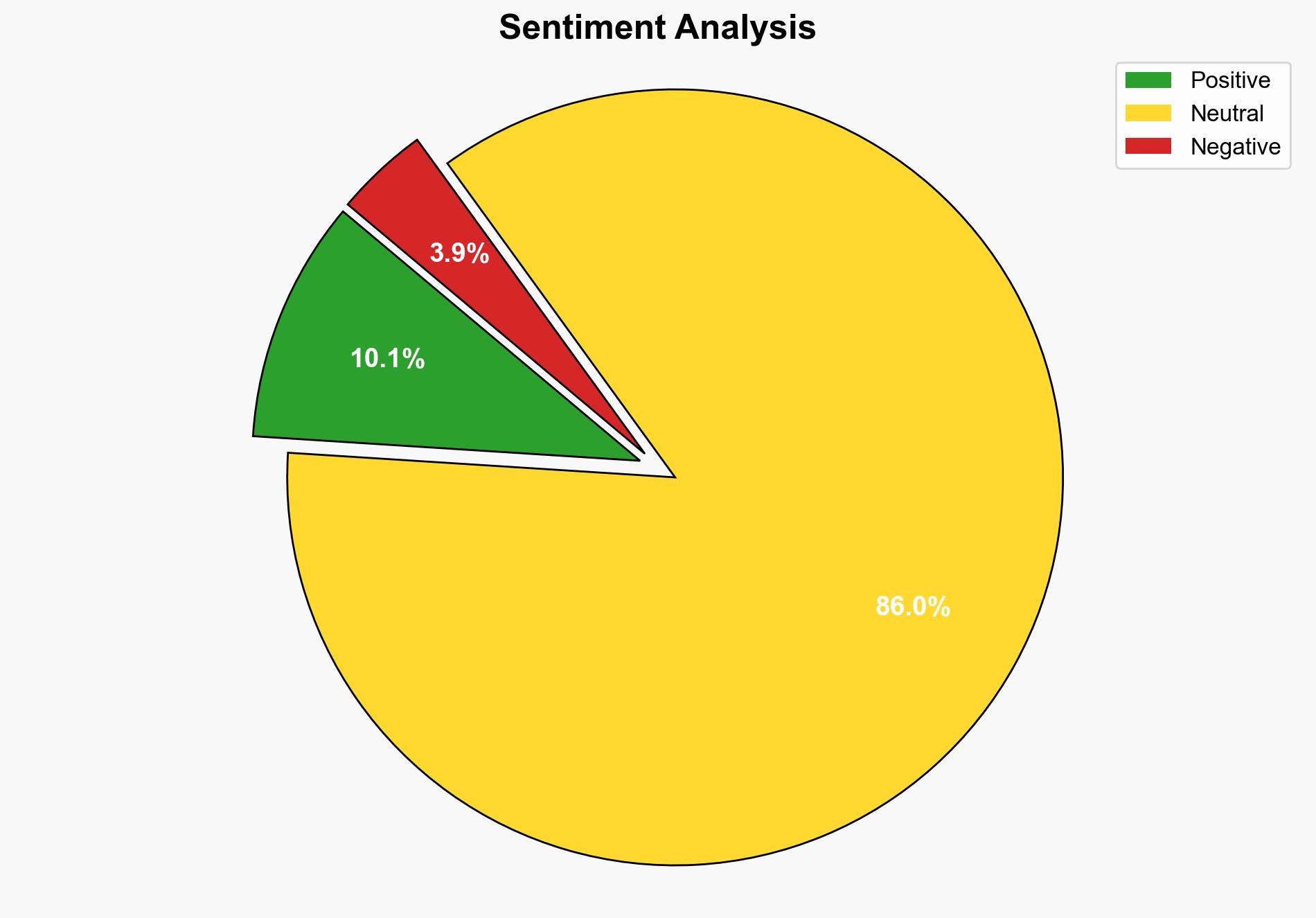

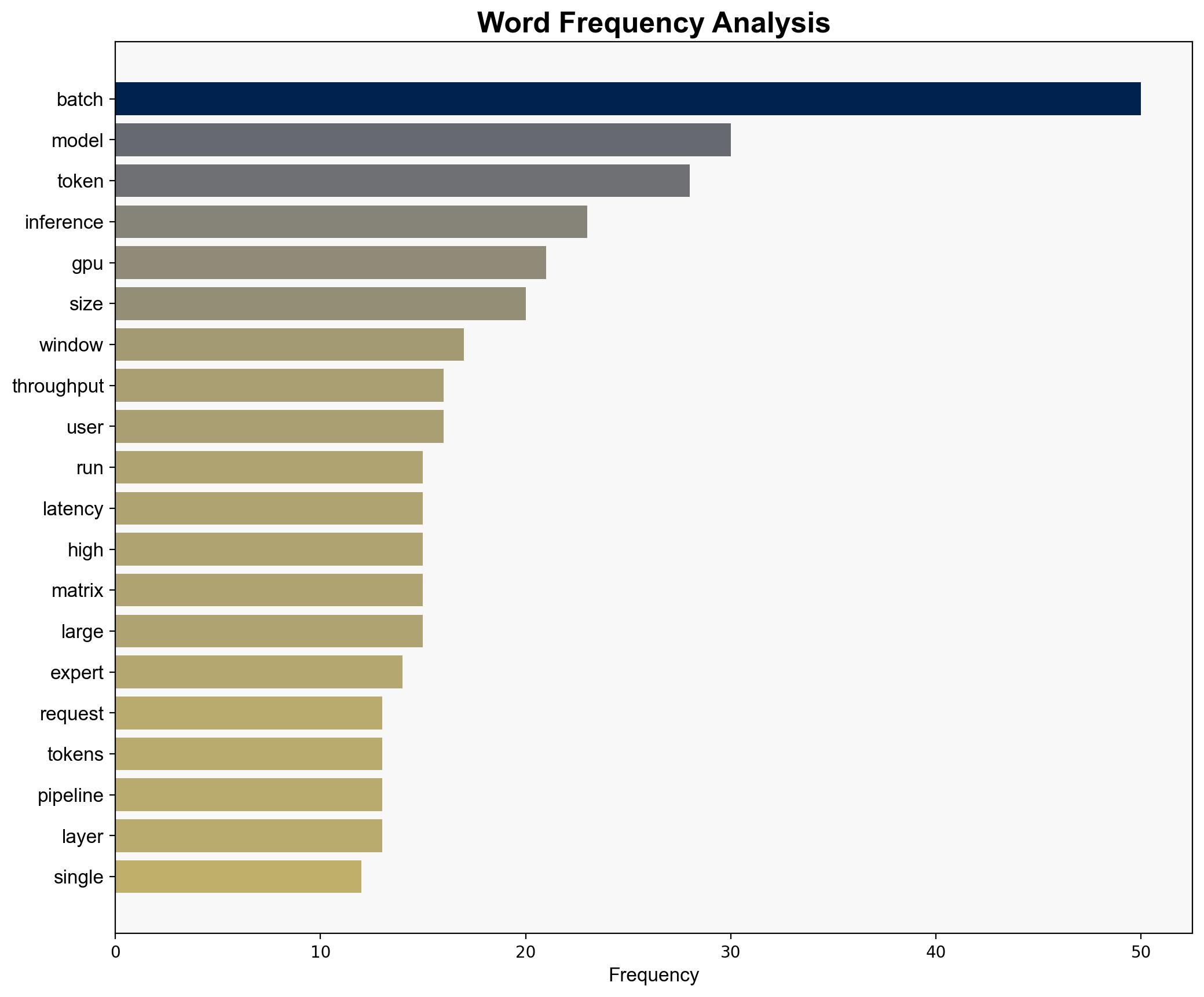

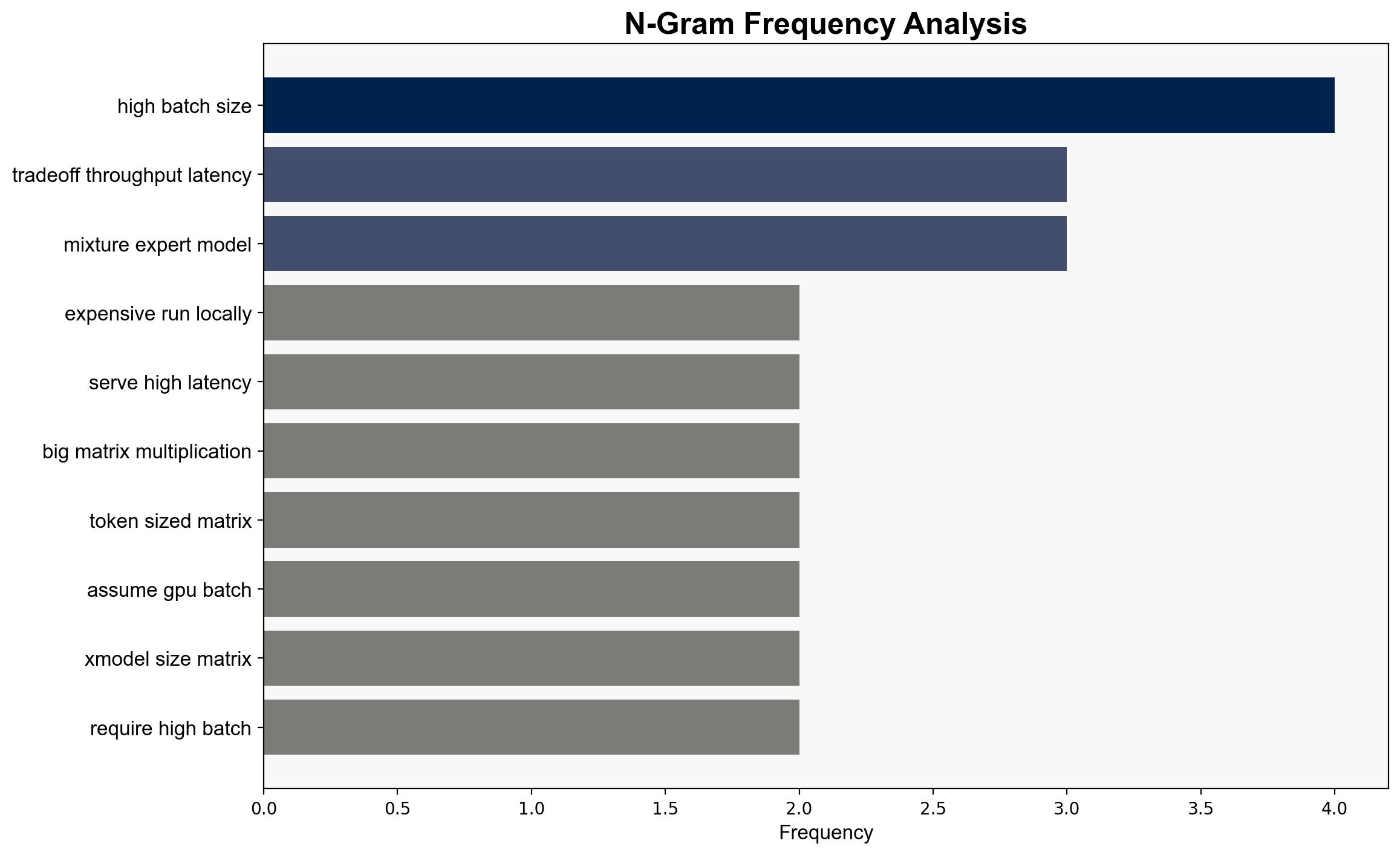

DeepSeek is a large language model whose inference is optimized for high-throughput, large-scale serving; deployed locally, it becomes inefficient and costly. The discrepancy arises because the model relies on batch processing and GPU-intensive computation: batching many requests together keeps expensive GPUs busy and spreads their cost across users, which is economical at scale but not in a small local setting where there are too few requests to fill a batch. Strategic recommendations include tuning local deployments and exploring alternative models for local use.
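The batching argument above can be made concrete with a toy cost model. The sketch below is illustrative only: the function names, the linear step-time formula, and every number (fixed overhead, per-sequence cost, GPU price) are assumptions chosen for the example, not measurements of DeepSeek or of any real hardware.

```python
def step_time_ms(batch_size, fixed_ms=30.0, per_seq_ms=0.5):
    """Time for one decoding step (hypothetical model).

    Assumes a fixed overhead paid once per step, plus a small
    cost for each sequence in the batch.
    """
    return fixed_ms + per_seq_ms * batch_size


def cost_per_million_tokens(batch_size, gpu_dollars_per_hour=2.0):
    """Dollars per million generated tokens under the toy model.

    Each decoding step is assumed to produce one token per sequence.
    """
    tokens_per_second = batch_size / (step_time_ms(batch_size) / 1000.0)
    tokens_per_hour = tokens_per_second * 3600.0
    return gpu_dollars_per_hour / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Batch size 1 stands in for a single local user; larger
    # batches stand in for a provider serving many users at once.
    for b in (1, 8, 64, 256):
        print(f"batch={b:>3}  $/1M tokens={cost_per_million_tokens(b):.2f}")
```

Under these assumed numbers, the fixed per-step overhead dominates at batch size 1, so the cost per token falls steeply as the batch grows. That is the sense in which the same model can be cheap at scale and expensive locally.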

2. Detailed Analysis

The following structured analytic techniques have been applied to ensure methodological consistency:

Adversarial Threat Simulation

Simulations indicate that local deployments of DeepSeek suffer from high latency and low throughput, which creates performance bottlenecks in the systems that depend on them.

Indicators Development

Monitoring GPU usage and batch processing efficiency can serve as indicators of system performance and potential vulnerabilities in local deployments.
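As a rough illustration of such indicators, the sketch below summarizes a series of per-step samples into an average batch fill ratio and an average GPU utilization. The `Sample` type, the `summarize` function, and the thresholds are hypothetical; how the samples are collected depends on the serving stack and the monitoring tool in use, which the source does not specify.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    batch_size: int      # sequences actually in the batch for this step
    max_batch_size: int  # configured batch capacity
    gpu_util: float      # GPU utilization in [0, 1], from any monitor


def summarize(samples, min_fill=0.5, min_util=0.6):
    """Reduce samples to two indicators and a simple underuse flag.

    The thresholds are arbitrary placeholders, not recommended values.
    """
    fill = mean(s.batch_size / s.max_batch_size for s in samples)
    util = mean(s.gpu_util for s in samples)
    return {
        "batch_fill": fill,
        "gpu_util": util,
        "underused": fill < min_fill or util < min_util,
    }


if __name__ == "__main__":
    # A single-user local deployment: batches are nearly empty.
    local = [Sample(1, 64, 0.10), Sample(2, 64, 0.20)]
    print(summarize(local))
```

A persistently low batch fill ratio is the more direct signal here, since it shows that the deployment lacks the concurrent traffic that batching needs.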

Bayesian Scenario Modeling

Probabilistic models predict that local deployments will face higher operational costs and reduced performance, which should weigh on any decision to run AI applications locally.

Network Influence Mapping

Mapping the influence of GPU and batch processing on DeepSeek’s performance highlights the critical role of infrastructure in determining operational efficiency.

3. Implications and Strategic Risks

The inefficiency of DeepSeek in local settings poses strategic risks, including increased operational costs and potential delays in AI-driven decision-making processes. This could affect sectors reliant on real-time data processing and analysis. Additionally, the reliance on large-scale GPU infrastructure may limit flexibility and adaptability in rapidly changing environments.

4. Recommendations and Outlook

- Tune local deployments for the low-traffic case. Smaller batch sizes reduce latency, but at the cost of throughput, so pair them with measures that improve GPU efficiency.

- Consider alternative AI models that are better suited to local operation, to reduce costs and improve performance.

- In the best-case scenario, improved local deployment strategies could lead to cost savings and enhanced operational efficiency. In the worst-case scenario, continued inefficiencies could result in significant operational disruptions.
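The batch-size recommendation above involves a trade-off that a short sketch can make visible. It assumes requests arrive evenly spaced at a given rate and that the server waits at most a fixed window before running whatever it has collected; both function names and all numbers are hypothetical.

```python
import math


def achievable_batch_size(requests_per_second, window_s):
    """Requests collected within one waiting window.

    Assumes evenly spaced arrivals: the first request opens the
    window, and every later arrival inside it joins the batch.
    """
    return 1 + math.floor(requests_per_second * window_s)


def wait_to_fill_s(batch_size, requests_per_second):
    """Time the first request waits for the batch to fill completely."""
    return (batch_size - 1) / requests_per_second


if __name__ == "__main__":
    window = 0.25  # seconds the server is willing to wait (assumed)
    for label, rate in (("local", 0.1), ("at scale", 1000.0)):
        b = achievable_batch_size(rate, window)
        print(f"{label}: rate={rate}/s -> batch size {b}")
    # Forcing a large batch at a local arrival rate costs latency.
    print("wait to fill 64 at 0.1 req/s:", wait_to_fill_s(64, 0.1), "s")
```

With these assumed rates, a short window yields a large batch only when traffic is heavy. A local deployment must either run nearly empty batches or wait a long time to fill them, which is why reducing the batch size helps latency without recovering throughput.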

5. Key Individuals and Entities

No specific individuals are mentioned in the source text. The focus remains on the technical and strategic aspects of DeepSeek’s deployment.

6. Thematic Tags

AI deployment strategies, operational efficiency, GPU optimization, local vs. scale deployment